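Introduction to μC/OS-II

μC/OS-II is a real-time operating system (RTOS) developed by Micrium, a company headquartered in Weston, Florida. The company's website provides the source code of the operating system; commercial use requires a paid license.

Company website: http://micrium.com

(The following information comes from the μC/OS-II User Manual on the Micrium website.)

μC/OS-II is pronounced “Micro C OS 2” and stands for Micro-Controller Operating System Version 2.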

μC/OS-II is based on μC/OS, which was first released in 1992.

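A distinguishing feature of μC/OS-II is that it meets the requirements of safety-critical systems.

(In Chinese, “safety-critical system” is rendered as 安全关键系统, 安全攸关系统, or 安全苛求系统.)

Application areas

System concepts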

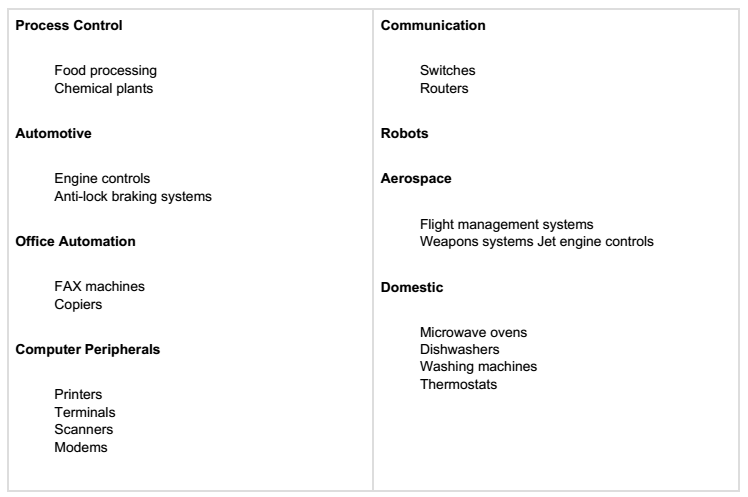

Foreground/Background Systems

(Figure)

Critical Section of Code

Code that must not be interrupted.

A critical section of code, also called a critical region, is code that needs to be treated indivisibly. Once the section of code starts executing, it must not be interrupted.

Resource

A resource is any entity used by a task. A resource can thus be an I/O device, such as a printer, a keyboard, or a display, or a variable, a structure, or an array.

Shared Resource

Sharing resources is a problem introduced by multitasking; the corresponding solution is mutual exclusion.

A shared resource is a resource that can be used by more than one task. Each task should gain exclusive access to the shared resource to prevent data corruption. This is called mutual exclusion.

Multitasking

Multitasking means scheduling and switching the CPU among different tasks.

Multitasking is the process of scheduling and switching the CPU (Central Processing Unit) between several tasks; a single CPU switches its attention between several sequential tasks.

Task

A task is also called a thread. Each task has its own set of CPU registers and its own stack area.

A task, also called a thread, is a simple program that thinks it has the CPU all to itself. The design process for a real-time application involves splitting the work to be done into tasks responsible for a portion of the problem. Each task is assigned a priority, its own set of CPU registers, and its own stack area.

Context Switch (or Task Switch)

When a multitasking kernel decides to run a different task, it simply saves the current task’s context (CPU registers) in the current task’s context storage area — its stack.

Kernel

The kernel is the part of a multitasking system responsible for the management of tasks (i.e., for managing the CPU’s time) and communication between tasks. The fundamental service provided by the kernel is context switching. The use of a real-time kernel generally simplifies the design of systems by allowing the application to be divided into multiple tasks managed by the kernel.

A kernel adds overhead to your system because the services provided by the kernel require execution time. The amount of overhead depends on how often you invoke these services. In a well-designed application, a kernel will use up between 2 and 5% of CPU time. Because a kernel is software that gets added to your application, it requires extra ROM (code space) and additional RAM for the kernel data structures. In addition, each task requires its own stack space, which tends to consume RAM quickly.

Scheduler

The scheduler, also called the dispatcher, is the part of the kernel responsible for determining which task will run next. Most real-time kernels are priority based. Each task is assigned a priority based on its importance.
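As a minimal sketch of priority-based selection, assume the kernel tracks ready tasks in an 8-bit mask where bit p is set when the task at priority p is ready, and that a lower number means a more important task. The names `ready_mask`, `sched_make_ready`, `sched_make_waiting`, and `sched_highest_ready` are hypothetical and not part of any kernel API.

```c
#include <stdint.h>

/* Hypothetical ready list: bit p set means the task at priority p is ready.
   Assumption: priority 0 is the most important, 7 the least. */
static uint8_t ready_mask = 0;

void sched_make_ready(uint8_t prio)   { ready_mask |= (uint8_t)(1u << prio); }
void sched_make_waiting(uint8_t prio) { ready_mask &= (uint8_t)~(1u << prio); }

/* Return the priority of the most important ready task, or -1 if none. */
int sched_highest_ready(void)
{
    for (int prio = 0; prio < 8; prio++) {
        if (ready_mask & (1u << prio)) {
            return prio;
        }
    }
    return -1;
}
```

A real kernel would typically replace the loop with a lookup table so the search takes constant time.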

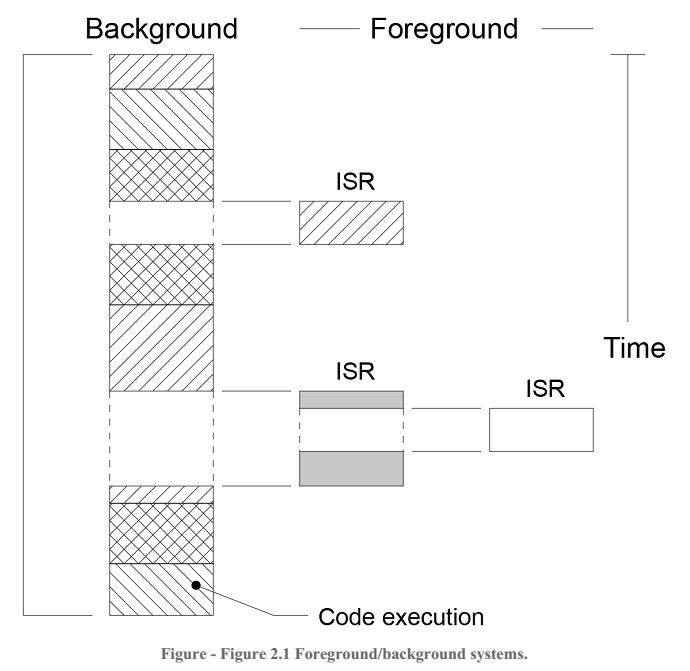

Non-Preemptive Kernel

Each task must explicitly give up control of the CPU.

(Figure)

Non-preemptive kernels require that each task does something to explicitly give up control of the CPU. To maintain the illusion of concurrency, this process must be done frequently. Non-preemptive scheduling is also called cooperative multitasking; tasks cooperate with each other to share the CPU. Asynchronous events are still handled by ISRs. An ISR can make a higher priority task ready to run, but the ISR always returns to the interrupted task. The new higher priority task will gain control of the CPU only when the current task gives up the CPU.

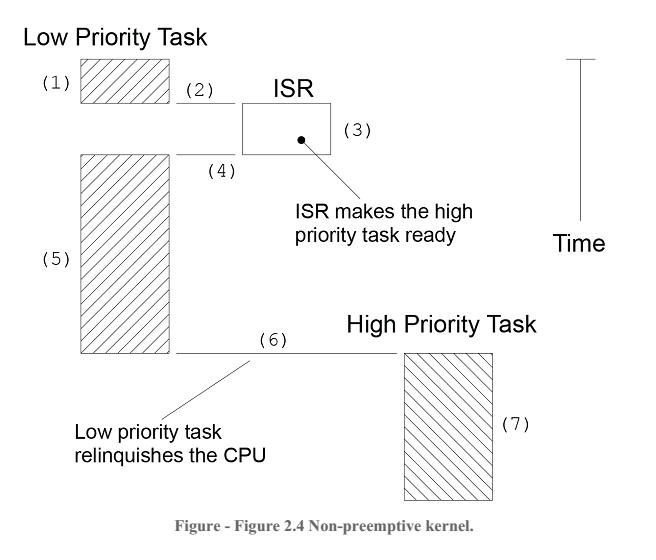

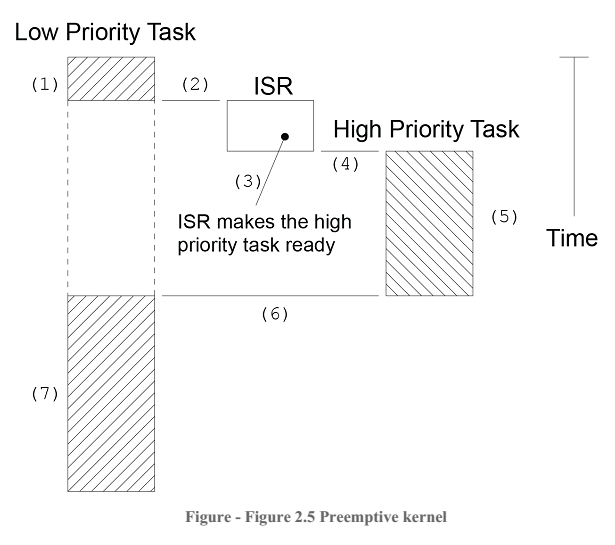

Preemptive Kernel

The highest-priority task that is ready to run executes first.

(Figure)

A preemptive kernel is used when system responsiveness is important. Because of this, µC/OS-II and most commercial real-time kernels are preemptive. The highest priority task ready to run is always given control of the CPU. When a task makes a higher priority task ready to run, the current task is preempted (suspended) and the higher priority task is immediately given control of the CPU. If an ISR makes a higher priority task ready, when the ISR completes, the interrupted task is suspended and the new higher priority task is resumed.

Reentrancy

A reentrant function can be called by two or more tasks without data corruption.

A reentrant function can be used by more than one task without fear of data corruption. A reentrant function can be interrupted at any time and resumed at a later time without loss of data. Reentrant functions either use local variables (i.e., CPU registers or variables on the stack) or protect data when global variables are used.

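Example 1 (reentrant):

    void strcpy(char *dest, char *src)
    {
        /* Copy characters up to and including the terminating NUL.
           No extra write is needed after the loop; one would land
           one byte past the end of the copied string. */
        while ((*dest++ = *src++) != '\0') {
            ;
        }
    }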

Because copies of the arguments to strcpy() are placed on the task’s stack, strcpy() can be invoked by multiple tasks without fear that the tasks will corrupt each other’s pointers.

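Example 2 (non-reentrant):

    int Temp;    /* global shared by every caller */

    void swap(int *x, int *y)
    {
        Temp = *x;    /* a preempting task that calls swap() overwrites Temp */
        *x   = *y;
        *y   = Temp;
    }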
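One way to make swap() reentrant, following the rule about local variables stated earlier, is to keep the temporary on the caller's stack. This is a minimal sketch; the name `swap_reentrant` is chosen here for illustration only.

```c
/* Reentrant variant: temp lives on the stack of whichever task calls
   the function, so a preempting task cannot overwrite it. */
void swap_reentrant(int *x, int *y)
{
    int temp = *x;
    *x = *y;
    *y = temp;
}
```

The other option named earlier, keeping the global and protecting it, would wrap the three assignments in a critical section instead.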

Round-Robin Scheduling

For tasks of equal priority, the kernel gives each task a time slice in turn.

When two or more tasks have the same priority, the kernel allows one task to run for a predetermined amount of time, called a quantum, then selects another task. This is also called time slicing. The kernel gives control to the next task in line if the current task has no work to do during its time slice or the current task completes before the end of its time slice or the time slice ends.

µC/OS-II does not currently support round-robin scheduling. Each task must have a unique priority in your application.

Task Priority

A priority is assigned to each task. The more important the task, the higher the priority given to it. With most kernels, you are generally responsible for deciding what priority each task gets.

Static Priorities

Task priorities cannot be changed during execution.

Task priorities are said to be static when the priority of each task does not change during the application’s execution. Each task is thus given a fixed priority at compile time. All the tasks and their timing constraints are known at compile time in a system where priorities are static.

Dynamic Priorities

Task priorities can be changed during execution.

Task priorities are said to be dynamic if the priority of tasks can be changed during the application’s execution; each task can change its priority at run time. This is a desirable feature to have in a real-time kernel to avoid priority inversions.

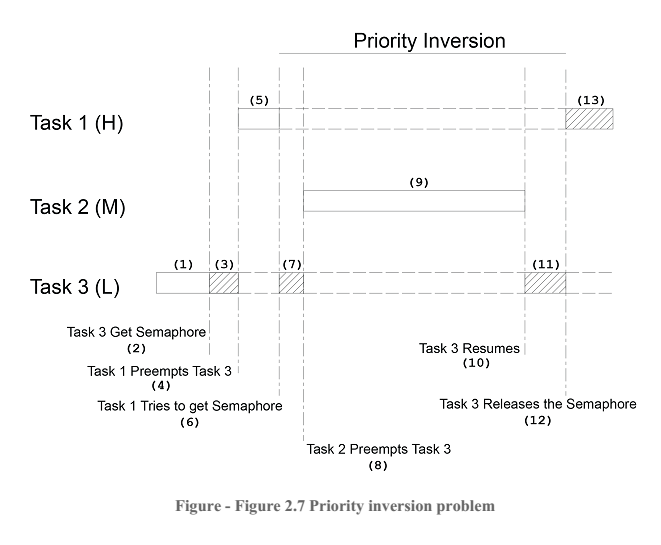

Priority Inversions

Priority inversion is a problem in real-time systems and occurs mostly when you use a real-time kernel.

Example:

(Figure)

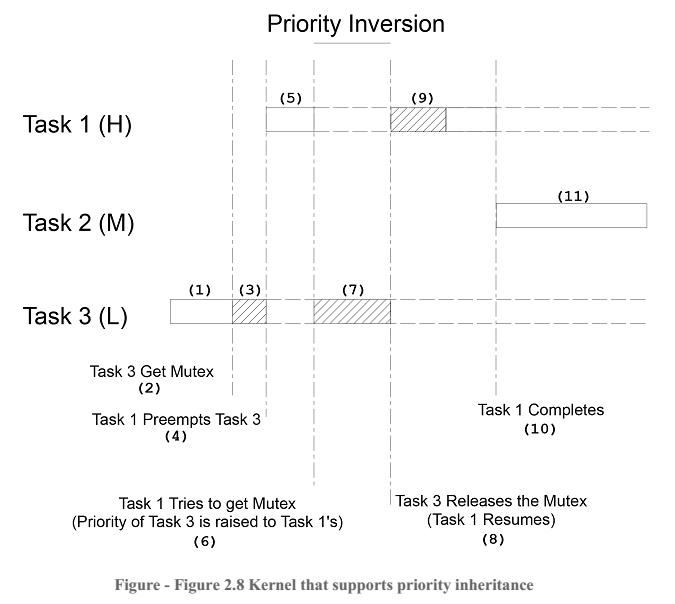

μC/OS-II addresses this problem with priority inheritance.

A multitasking kernel should allow task priorities to change dynamically to help prevent priority inversions. However, it takes some time to change a task’s priority. What if Task 3 had completed access of the resource before it was preempted by Task 1 and then by Task 2? Had you raised the priority of Task 3 before accessing the resource and then lowered it back when done, you would have wasted valuable CPU time. What is really needed to avoid priority inversion is a kernel that changes the priority of a task automatically. This is called priority inheritance and µC/OS-II provides this feature.

(Figure)

Assigning Task Priorities

Assigning task priorities is not a trivial undertaking because of the complex nature of real-time systems. In most systems, not all tasks are considered critical. Noncritical tasks should obviously be given low priorities. Most real-time systems have a combination of SOFT and HARD requirements. In a SOFT real-time system, tasks are performed as quickly as possible, but they don’t have to finish by specific times. In HARD real-time systems, tasks have to be performed not only correctly, but on time.

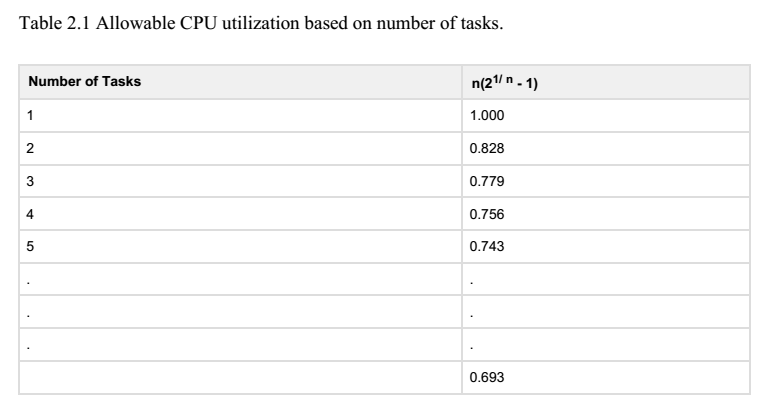

Rate Monotonic Scheduling (RMS)

In computer science, rate-monotonic scheduling (RMS) is a scheduling algorithm used in real-time operating systems (RTOS) with a static-priority scheduling class. The static priorities are assigned on the basis of the cycle duration of the job: the shorter the cycle duration, the higher the job's priority.

RMS makes a number of assumptions:

All tasks are periodic (they occur at regular intervals).

Tasks do not synchronize with one another, share resources, or exchange data.

The CPU must always execute the highest priority task that is ready to run. In other words, preemptive scheduling must be used.

Liu, C. L.; Layland, J. (1973), "Scheduling algorithms for multiprogramming in a hard real-time environment", Journal of the ACM 20 (1): 46–61, doi:10.1145/321738.321743.

This paper proved the following:

For a set of n periodic tasks with unique periods, a feasible schedule that will always meet deadlines exists if the CPU utilization is below a specific bound (depending on the number of tasks). The schedulability test for RMS is:

$$\sum_{i=1}^{n} \frac{C_i}{T_i} \le n\left(2^{1/n} - 1\right)$$

where $C_i$ is the maximum execution time of task i and $T_i$ is the execution period of task i. In other words, $C_i/T_i$ is the fraction of CPU time required to execute task i. As the number of tasks grows toward infinity, the bound approaches ln(2), or about 0.693. This means that to meet all HARD real-time deadlines based on RMS, CPU utilization of all time-critical tasks should be less than 70 percent.
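The test can be sketched in code. The bound used here is the Liu and Layland utilization bound n(2^(1/n) - 1), which tends to ln(2) as n grows. The function names `rms_bound` and `rms_schedulable` are hypothetical. Computing the n-th root by bisection is a choice made only to keep the sketch free of library dependencies.

```c
/* Utilization bound n * (2^(1/n) - 1) for n tasks (n >= 1).
   The n-th root of 2 is found by bisection on the interval [1, 2]. */
double rms_bound(int n)
{
    double lo = 1.0, hi = 2.0;
    for (int iter = 0; iter < 60; iter++) {
        double mid = 0.5 * (lo + hi);
        double p = 1.0;
        for (int k = 0; k < n; k++) {
            p *= mid;                  /* p = mid^n */
        }
        if (p < 2.0) {
            lo = mid;
        } else {
            hi = mid;
        }
    }
    return n * (lo - 1.0);
}

/* Returns 1 if the total utilization sum(c[i] / t[i]) is within the
   bound, else 0. c[i] is the worst-case execution time and t[i] the
   period of task i, in the same time unit. */
int rms_schedulable(const double *c, const double *t, int n)
{
    double u = 0.0;
    for (int i = 0; i < n; i++) {
        u += c[i] / t[i];
    }
    return u <= rms_bound(n);
}
```

For three tasks the bound is about 0.78, so a task set with total utilization 0.3 passes. The test is a sufficient condition: a task set that fails it is not necessarily unschedulable.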

(Figure)


Mutual Exclusion

The easiest way for tasks to communicate with each other is through shared data structures. This is especially easy when all tasks exist in a single address space and can reference global variables, pointers, buffers, linked lists, ring buffers, etc. Although sharing data simplifies the exchange of information, you must ensure that each task has exclusive access to the data to avoid contention and data corruption. The most common methods of obtaining exclusive access to shared resources are:

disabling interrupts

performing test-and-set operations

disabling scheduling

using semaphores.

Disabling interrupts

Pseudocode:

    Disable interrupts;
    Access the resource (read/write from/to variables);
    Reenable interrupts;

μC/OS-II implementation:

    void Function (void)
    {
        OS_ENTER_CRITICAL();
        /* You can access shared data in here */
        OS_EXIT_CRITICAL();
    }

Test-and-set operations

If you are not using a kernel, two functions could ‘agree’ that to access a resource, they must check a global variable and if the variable is 0, the function has access to the resource. To prevent the other function from accessing the resource, however, the first function that gets the resource simply sets the variable to 1. This is commonly called a Test-And-Set (or TAS) operation.
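The check and the set must happen as one indivisible step; otherwise both functions could read 0 before either one writes 1. Below is a minimal sketch using the C11 `atomic_flag` type from `<stdatomic.h>`. This is an assumption, since the text does not say how the operation is made atomic; on a toolchain without C11 atomics, one would typically disable interrupts around the test instead. The names `resource_busy`, `resource_try_acquire`, and `resource_release` are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Clear (0) means the resource is free; set (1) means it is in use. */
static atomic_flag resource_busy = ATOMIC_FLAG_INIT;

/* Atomically test the flag and set it. Returns true if the caller now
   owns the resource, false if it was already taken. */
bool resource_try_acquire(void)
{
    /* atomic_flag_test_and_set returns the value held before the set. */
    return !atomic_flag_test_and_set(&resource_busy);
}

/* Mark the resource as free again. */
void resource_release(void)
{
    atomic_flag_clear(&resource_busy);
}
```

A caller that gets false can retry later or do other work; the sketch never blocks.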

Disabling scheduling

If your task is not sharing variables or data structures with an ISR, you can disable and enable scheduling. In this case, two or more tasks can share data without the possibility of contention. You should note that while the scheduler is locked, interrupts are enabled, and if an interrupt occurs while in the critical section, the ISR is executed immediately. At the end of the ISR, the kernel always returns to the interrupted task, even if a higher priority task has been made ready to run by the ISR.

μC/OS-II example:

    void Function (void)
    {
        OSSchedLock();
        /* You can access shared data in here (interrupts are recognized) */
        OSSchedUnlock();
    }

Semaphores

The semaphore was invented by Edsger Dijkstra in the mid-1960s. It is a protocol mechanism offered by most multitasking kernels. Semaphores are used to control access to a shared resource (mutual exclusion), signal the occurrence of an event, and allow two tasks to synchronize their activities.

μC/OS-II example:

    OS_EVENT *SharedDataSem;

    void Function (void)
    {
        INT8U err;

        OSSemPend(SharedDataSem, 0, &err);    /* timeout 0: wait forever */
        /* You can access shared data in here (interrupts are recognized) */
        OSSemPost(SharedDataSem);
    }

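Deadlock (or Deadly Embrace)

Two tasks each wait for the other to release a shared resource.

A deadlock, also called a deadly embrace, is a situation in which two tasks are each unknowingly waiting for resources held by the other.

Example:

Task A acquires the lock on variable x and task B acquires the lock on variable y. Task A then waits for the lock on y while task B waits for the lock on x.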

Synchronization

Tasks can use semaphores to synchronize.

A task can be synchronized with an ISR (or another task when no data is being exchanged) by using a semaphore.

Event Flags

Use event flags when one task must synchronize with several events.

Event flags are used when a task needs to synchronize with the occurrence of multiple events. The task can be synchronized when any of the events have occurred. This is called disjunctive synchronization (logical OR). A task can also be synchronized when all events have occurred. This is called conjunctive synchronization (logical AND).

Kernels like µC/OS-II which support event flags offer services to SET event flags, CLEAR event flags, and WAIT for event flags (conjunctively or disjunctively).
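The difference between disjunctive and conjunctive waits comes down to one comparison on a bit mask. The sketch below models only that matching logic; it does not block or wake tasks, and every name in it (`event_flags`, `flags_set`, `flags_clear`, `flags_satisfied`, `wait_mode_t`) is hypothetical rather than a kernel API.

```c
#include <stdbool.h>
#include <stdint.h>

typedef enum { WAIT_ANY, WAIT_ALL } wait_mode_t;

static uint8_t event_flags = 0;    /* one bit per event */

void flags_set(uint8_t mask)   { event_flags |= mask; }
void flags_clear(uint8_t mask) { event_flags &= (uint8_t)~mask; }

/* Would a task waiting on the events in mask be released right now? */
bool flags_satisfied(uint8_t mask, wait_mode_t mode)
{
    uint8_t hit = (uint8_t)(event_flags & mask);
    if (mode == WAIT_ALL) {
        return hit == mask;    /* conjunctive: every requested bit is set */
    }
    return hit != 0;           /* disjunctive: at least one bit is set */
}
```

With only bit 0 set, a task waiting on mask 0x03 is released in WAIT_ANY mode but not in WAIT_ALL mode.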

Intertask Communication

Tasks can communicate through global variables or by sending messages.

It is sometimes necessary for a task or an ISR to communicate information to another task. This information transfer is called intertask communication. Information may be communicated between tasks in two ways: through global data or by sending messages.

Message Mailboxes

A message mailbox, also called a message exchange, is accessed through services provided by the kernel.

Messages can be sent to a task through kernel services. A Message Mailbox, also called a message exchange, is typically a pointer-size variable. Through a service provided by the kernel, a task or an ISR can deposit a message (the pointer) into this mailbox. Similarly, one or more tasks can receive messages through a service provided by the kernel. Both the sending task and receiving task agree on what the pointer is actually pointing to.

Message Queues

A message queue is used to send one or more messages to a task. A message queue is basically an array of mailboxes. Through a service provided by the kernel, a task or an ISR can deposit a message (the pointer) into a message queue. Similarly, one or more tasks can receive messages through a service provided by the kernel. Both the sending task and receiving task or tasks have to agree as to what the pointer is actually pointing to. Generally, the first message inserted in the queue will be the first message extracted from the queue (FIFO). In addition to extracting messages in a FIFO fashion, µC/OS-II allows a task to get messages Last-In-First-Out (LIFO).
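The "array of mailboxes" idea can be sketched as a small ring buffer of pointers. The sketch models LIFO delivery as posting to the front of the queue, which is an assumption about where the ordering is decided. It has no blocking and no locking, and all names (`msg_queue_t`, `q_post`, `q_post_front`, `q_get`, `Q_SIZE`) are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

#define Q_SIZE 4u

/* Hypothetical queue of message pointers. */
typedef struct {
    void  *slot[Q_SIZE];
    size_t head;     /* index of the next message to extract */
    size_t count;    /* number of messages currently stored */
} msg_queue_t;

/* FIFO post: append behind the messages already waiting. */
bool q_post(msg_queue_t *q, void *msg)
{
    if (q->count == Q_SIZE) return false;    /* queue full */
    q->slot[(q->head + q->count) % Q_SIZE] = msg;
    q->count++;
    return true;
}

/* LIFO post: place the message ahead of all waiting messages. */
bool q_post_front(msg_queue_t *q, void *msg)
{
    if (q->count == Q_SIZE) return false;    /* queue full */
    q->head = (q->head + Q_SIZE - 1u) % Q_SIZE;
    q->slot[q->head] = msg;
    q->count++;
    return true;
}

/* Extract the message at the head, or NULL if the queue is empty. */
void *q_get(msg_queue_t *q)
{
    if (q->count == 0) return NULL;
    void *msg = q->slot[q->head];
    q->head = (q->head + 1u) % Q_SIZE;
    q->count--;
    return msg;
}
```

As the text notes, sender and receiver must agree on what each pointer refers to; the queue itself stores only the addresses.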

Interrupts

An interrupt is a hardware mechanism used to inform the CPU that an asynchronous event has occurred. When an interrupt is recognized, the CPU saves part (or all) of its context (i.e., registers) and jumps to a special subroutine called an Interrupt Service Routine, or ISR.

Interrupt Latency

Probably the most important specification of a real-time kernel is the amount of time interrupts are disabled. All real-time systems disable interrupts to manipulate critical sections of code and reenable interrupts when the critical section has executed. The longer interrupts are disabled, the higher the interrupt latency.

Interrupt Response

Interrupt Recovery

Interrupt recovery is defined as the time required for the processor to return to the interrupted code or to a higher priority task in the case of a preemptive kernel. Interrupt recovery in a foreground/background system simply involves restoring the processor’s context and returning to the interrupted task.

Interrupt Latency, Response, and Recovery

ISR Processing Time

Nonmaskable Interrupts (NMIs)

Sometimes, an interrupt must be serviced as quickly as possible and cannot afford to have the latency imposed by a kernel. In these situations, you may be able to use the Nonmaskable Interrupt (NMI) provided on most microprocessors. Because the NMI cannot be disabled, interrupt latency, response, and recovery are minimal.

Clock Tick

The clock tick is a special interrupt; it is the system's heartbeat.

A clock tick is a special interrupt that occurs periodically. This interrupt can be viewed as the system’s heartbeat. The time between interrupts is application specific and is generally between 10 and 200 ms. The clock tick interrupt allows a kernel to delay tasks for an integral number of clock ticks and to provide timeouts when tasks are waiting for events to occur. The faster the tick rate, the higher the overhead imposed on the system.
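Because delays are counted in whole ticks, an application usually converts a time in milliseconds to a tick count. Below is a minimal sketch, assuming a tick rate of 100 Hz (a 10 ms tick); the macro `TICKS_PER_SEC` and the function `ms_to_ticks` are hypothetical names.

```c
#include <stdint.h>

/* Hypothetical tick rate: 100 Hz gives a 10 ms tick. */
#define TICKS_PER_SEC 100u

/* Convert a delay in milliseconds to whole ticks, rounding up so the
   tick count always covers at least the requested time. */
uint32_t ms_to_ticks(uint32_t ms)
{
    return (uint32_t)(((uint64_t)ms * TICKS_PER_SEC + 999u) / 1000u);
}
```

With these assumptions, a 25 ms request becomes 3 ticks. Because the first tick can arrive at any moment after the request, the actual wait can be up to one tick shorter than the tick count suggests.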

Memory Requirements

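Both ROM and RAM requirements must be determined. The RAM estimate must account for each task's stack and for factors such as interrupt nesting.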